Hi 👋

Hi I am Tejaswi. These are my notes.

I am broadly intersted in systems, networking, linux and math. These notes form markers as I explore these realms.

0. Preface

-

This is a simple collection of some of my notes. Some parts are incomplete, I’ll mark them with a “WIP“ tag.

-

No license. I am doing this for myself. If you find it helpful, that is great.

-

Please send any feedback/errors to tteja2010 at gmail dot com .

-

I went through the Linux code and then later read/understood OS concepts. My way of looking at OS related topics is hence biased towards Linux, which may make my notes weird/unnatural.

-

I like my notes to be very simple. If I were to forget the past couple of years of my life due to an accident, I should still be able to catch up by going through my notes (Assuming I discover that I had written these notes) That is how simple it should be. I don’t want to assume anything about the reader (my amnesiac self who just completed his bachelors) while writing them.

Linux Networking Notes

This subsection contains my notes on the networking subsystem.

Before we jump in, notes on how packets are represented in Linux and visualizing their processing.

N.0.1 Packet Representation in Linux

Packets are represented using sk_buff (socket buffer) in Linux. The struct is declared in include/linux/skbuff.h. I will call a packet as skb interchangeably from now on. The sk_buff struct contains two parts, the packet data and it’s meta data.

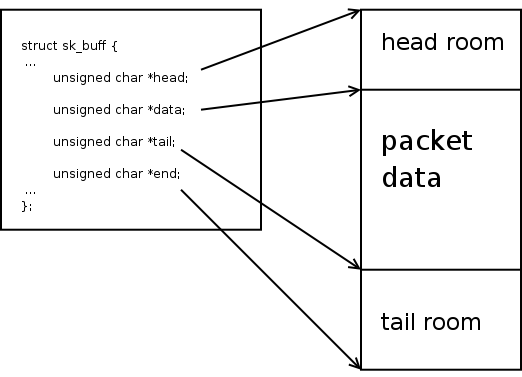

Firstly it contains pointers to the actual data. The actual packet with Ethernet, IP, transport headers and payload that has made it’s way over the network will be put in some memory that is allocated. The simplest way this is done is to allocate a contiguous memory block which will contain the whole packet. (We will see in later pages how very large packets can be created using lists of such blocks or how number of data copies can be reduced by having multiple small chunks of data.) skb->head points to the start of the this block, and skb->end points to the end of this block. The whole block need not contain meaningful data. skb->data points to the place where the packet data starts, and skb->tail points to the place where the packet data ends. This allows the packet to have some head room and tail room if the packet needs to expand. These four pointers are used to point to the actual data. They are placed at the end of the sk_buff struct. David Miller’s page on skb_data describes skb data in greater detail.

An image from the above page:

Additionally the skb contains lots of meta data. Without checking the actual data, a fully filled skb can provide the protocol information, checksum details, it’s corresponding socket, etc. The meta data is information that is extracted from the packet data or information attached to the current packet that can be used by all the layers. A few of these fields are explained in David Miller’s page How SKBs work.

This is similar to how photographs are saved. One part is the actual image, the second part is meta data like it’s dimensions, ISO, aperture, camera model, location information, etc. The meta data by itself is not useful but adds detail to the original data.

I’ll add a page which describes the skb and it’s fields in greater detail. TODO.

N.0.2 Visualizing Packet Processing

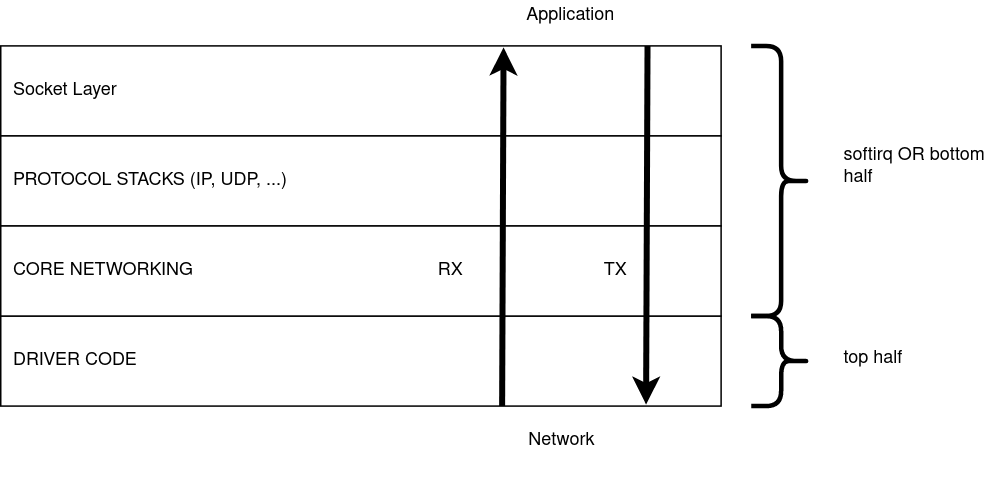

This is not a standard way of visualizing, but I think this is the right way to visualize packet processing and cant visualize in any other way. Receiving packets is in the bottom to top direction. And transmitting packets is in the top to bottom direction. Forwarding to a different layer is left to right. While receiving packets, drivers receive data first. The bottom most layer where the drivers stay. The drivers hand over the packet to the core network. The core networking code then passes it over to the right protocol stack(s). After the protocol stack processing is done, it enters socket layer, from where the user picks up the packet.

N.0.2.1 Top half and bottom half processing

The path from the driver to the socket queue is divided into two halves.

The top half happens first, the driver gets the raw packet and creates a skb. After an skb is created it calls functions to hand it over to the core networking code. The top half before exiting schedules the bottom half. Top half runs per packet and exits.

The bottom half begins picking up each packet and starts processing them. The packet is passed trough IP, UDP stacks and finally enqueues it into the socket queue. This is done for a bunch of packets. If there are packets that are still pending, the bottom half schedules itself and exits.

IMPORTANT:

- The top half is below the bottom half in my figure.

- I can use bottom half OR softirq processing interchangeably.

- Softirq processing done while receiving packets is also called NET_RX processing. I can use this as well. :)

- Core network code runs in both these halves. But most of it is in softirq processing.

With this, basic information we can start describing the RX and TX processing paths.

N1 Setup Qemu

These notes are based on the following videos:

- Running an external custom kernel in Fedora 32 under QEMU: Kernel Debugging Part 1 I am more used to Ubuntu, so I chose it instead.

- GDB on the Linux Kernel.

The script below is the same as the one described in these videos.

Please watch both the videos before continuing further.

Like described in the article on UML Setup, I like to learn by running a VM and attaching it via GDB. KVM and QEMU are the newer and well supported VM solutions. This page is a tutorial on how to launch a debug instance in QEMU and attach to it using GDB.

Install QEMU (check Qemu download page for distribution specific instructions).

N1.1 Setup & build a kernel with debug symbols

Clone the kernel from kernel git repo.

git clone git://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git linux

cd linux

Next run “make menuconfig” to modify a few configurations. On running the command a ncurses interface to enable/disable options will open. Use the arrow keys to move between options and ‘Y’ and ‘N’ keys to include/exclude options. First enable CONFIG_DEBUG_KERNEL. It is located under “Kernel hacking” as “Kernel debugging”. Next disable RANDOMIZE_MEMORY_PHYSICAL_PADDING. It is located under “Processor type and features” as “Randomize the kernel memory sections”. After making the changes save the changes and exit the menuconfig interface. A lot of youtube videos explain the same in detail, check them if confused. To reset any changes delete the “.config” file and start over by running “make defconfig”. Finally run make. It will take some to compile the kernel. Meanwhile move to the next step and install a VM in qemu.

make defconfig

make menuconfig # enable CONFIG_DEBUG_KERNEL,

# disable RANDOMIZE_MEMORY_PHYSICAL_PADDING

make -j1 # replace 1 with the number of CPUs that make

# should use to build the kernel.

N1.2 Create an image for QEMU

Move out of the linux directory and create an image for QEMU.

qemu-img create kernel-dev.img 20G

Next download ubuntu’s server iso image from here. It is a ~1GB file which can be used for a Linux server. You can alternatively install the desktop version if you are more comfortable with a GUI. Move the downloaded iso file into the same directory. Finally save the script below as start.sh.

#!/bin/bash

#startup.sh

KERNEL="linux/arch/x86_64/boot/bzImage"

RAM=1G

CPUS=2

DISK="kernel-dev.img"

if [ $# -eq 1 ]

then

qemu-system-x86_64 \

-enable-kvm \

-smp $CPUS \

-drive file=$DISK,format=raw,index=0,media=disk \

-m $RAM \

-serial stdio \

-drive file=$1,index=2,media=cdrom ## comment to run vanilla install

# use this option to boot using a cd iso

else

qemu-system-x86_64 \

-enable-kvm \

-smp $CPUS \

-drive file=$DISK,format=raw,index=0,media=disk \

-m $RAM \

-serial stdio \

-kernel $KERNEL \

-initrd initrd.img \

-S -s \

-cpu host \

-append "root=/dev/mapper/ubuntu--vg-ubuntu--lv ro nokaslr" \

-net user,hostfwd=tcp::5555-:22 -net nic \

# use this option to run debug kernel

# see the video 1 on how to pull the initrd.img

# the "root=/dev/ ..." command needs to pulled from grub.cfg (see video 1)

fi

The above script when given an ISO file passes it as an CD to the QEMU instance. This way we can install ubuntu into “kernel-dev.img”.

If no arguments are provided it tries to run the OS installed on kernel-dev.img. This way we can use the script to start the VM after we have completed installing ubuntu. At this point the directory structure should look like this:

.

├── kernel-dev.img

├── linux

├── ubuntu-21.04-live-server-amd64.iso

└── startup.sh

First to install ubuntu run: (if superuser privileges needed, run with sudo)

./startup.sh ubuntu-21.04-live-server-amd64.iso

Go through all the steps and install ubuntu. A lot of YouTube videos show the complete process. Use them as a reference if necessary.

Once the installation is complete, comment out the line which provides the cdrom option to boot into an vanilla ubuntu install.

./startup.sh ubuntu-21.04-live-server-amd64.iso #cdrom line commented

Now wait for the kernel compilation to complete. Then run the script without the arguments to boot into the kernel we built.

./startup.sh

The boot will wait for GDB to connect. On a separate terminal run:

gdb linux/vmlinux

Within the gdb prompt then run “target remote :1234” to connect to QEMU. The bt command should then show some stack within QEMU.

Run “hbreak start_kernel” to add a hardware breakpoint at start_kernel() and then run “continue”. The VM would then begin booting and will stop in the start_kernel function.

Add other hardware breakpoints (since QEMU uses hardware acceleration for Virtualization, normal SW breakpoints will not work) and start tinkering. The network options are similar to those of UML. Thses options have been commented in the above script.

N1.3 Network setup

By default the script provides an interface via which the VM can access both internet and the host machine (over ssh). This is the SLIRP networking mode. Follow the link here to read more.

The interface will be created. If the interface does not have an address, run dhclient on the interface so it is assigned an address. Next install an sshserver, if not installed during ubuntu installation, so we can access the guest over ssh.

sudo dhclient eth0 # provide the right interface name.

sudo apt update # needed to update apt cache.

sudo apt install openssh-server

Finally to login into the guest machine, run:

ssh -p 5555 localhost

N1. Setup UML (older)

SKIP THIS IF YOU WERE ABLE TO SETUP QEMU. This page is here only for completeness, but is not necessary to experiment with a kernel.

I prefer to learn/tinker with linux using UML. UML is User mode linux, which is a simple VM. It emulates a uniprocessor linux system, and can run even on machines with very old hardware (like my laptop). Check the UML homepage for additional details. This page is just to a simple tutorial on how to build and run it. I have also added sections on how to attach it to GDB for easy debugging and a section on how to setup basic networking between multiple UMLs which you can skip in the first read.

N0.1 Clone and build the kernel

Clone the kernel, and build it. Make defconfig sets up the default kernel config. The kernel config is a set of kernel features which will either be compiled into the kernel or will be compiled as modules. The architecture for which we are configuring is the UM (user mode) architechture. While building the config, you may be prompted to choose a configuration. Press enter to choose the default configuration. Once the configuration is done, a “.config” file will be populated with the chosen options. Begin compiling the code. Based on your machine’s CPU capabities, replace 1 with the number of parallel compilation jobs you want make to run. Please remain patient as the very first compilation will take some time.

git clone git://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git linux

cd linux

make defconfig ARCH=um

make -j1 ARCH=um

A new file “linux” will be created. This is the UML executable which runs as an application on the host machine. It needs a few other files and arguments to run, which will be explained in the next section.

N0.1.1 Kernel config [OPTIONAL]

The kernel src includes a neat way to configure the kernel. Run make menuconfig to configure various options. A ncurses interface should start up, with the instructions provided in the top. Pressing Y includes a config and N excludes a config. After configuring the kernel, save and exit the config. The .config file will get updated with the necessary configuration. To build the uml binary with debug symbols, edit KBUILD_HOSTCFLAGS in the Makefile. Just add a ‘-g’ option at the end. The kernel can then be rebuilt with the new configuration.

make menuconfig ARCH=um

make -j1 ARCH=um

Makefile diff:

HOSTCC = gcc

HOSTCXX = g++

-KBUILD_HOSTCFLAGS := -Wall -Wmissing-prototypes -Wstrict-prototypes -O2 \

+KBUILD_HOSTCFLAGS := -Wall -Wmissing-prototypes -Wstrict-prototypes -O2 -g \

-fomit-frame-pointer -std=gnu89 $(HOST_LFS_CFLAGS) \

$(HOSTCFLAGS)

KBUILD_HOSTCXXFLAGS := -O2 $(HOST_LFS_CFLAGS) $(HOSTCXXFLAGS)

N0.2 Rootfs setup

We have a compiled kernel but we still need a init script and other userspace programs. We need a rootfs to emulate the disk. There are a lot of blogs which describe how to build a rootfs. We will download a debian rootfs that is provided by google. Run the following command to download the rootfs and uncompress it. On uncompressing it a 1GiB net_test.rootfs.20150203 should be available.

wget -nv https://dl.google.com/dl/android/net_test.rootfs.20150203.xz

unxz net_test.rootfs.20150203.xz

Note: If the above download does not work, check the Google nettest script where the rootfs link can be found. Google uses UML to run nettests on the kernels OEMs ship to check for possible bugs.

We now have everything needed to run the UML.

.2.1 Adding files/programs to rootfs [OPTIONAL]

The rootfs now contains certain programs and a init script which can be used to boot into the UML. We may need to install programs for our testing or need to move files between UML and the host OS. By mounting it into a directory the rootfs contents can be accessed.

mkdir temp

sudo mount net_test.rootfs.20150203 temp

Copying from/to the directory is equivalent to copying files from/to the UML. In the below example I am copying my tmux configuration files into the rootfs. When I boot into UML, I will find the config file in the home directory. (Super user privilages are needed to add/remove files from the rootfs).

sudo cp ~/.tmux.conf temp/home/

By chroot-ing into the mounted directory, any necessary programs can be installed. On running chroot, you will able to edit the rootfs with super user privilages. I am installing tmux in the below code and then exiting the chroot shell.

sudo chroot temp

apt-get update

apt-get install tmux

exit

Once all the changes are done, unmount the rootfs.

sudo umount temp

0.2.1.1 apt-get is failing

Note: apt-get update may fail printing the following error:

Err http://ftp.jp.debian.org wheezy Release.gpg

Connection failed

If the /etc/hosts file contains an address for a particular hostname, the system will not do an additional DNS lookup. In this case, the address corresponding to ftp.jp.debian.org is incorrect, causing apt-get to fail. Run the command “dig ftp.jp.debian.org” in the host machine (not within chroot) to get the right address. Update the IP address to “133.5.166.3”. Finally /etc/hosts should contain the following entries:

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

133.5.166.3 ftp.jp.debian.org

0.2.1.2 apt-get is still failing !!!

OK, dont worry, download my rootfs from my github repo here.

0.3 Take it for a spin

Finally if all this works out you are ready to start a UML instance. Just run the following command, where you provide path to rootfs as the value against “ubda”, and 256MiB as the RAM. DO NOT forget the “M” in 256M, else UML will try to boot with just to 256Bytes of RAM, and fail :). I usually provide 256MiB RAM, you can go as low as 100 or 50MiB.

./linux ubda=net_test.rootfs.20150203 mem=256M

You will see the VM booting, printing dmesg, bringing up the various kernel susbsystems. Finally when a promt to enter the password appears, enter root as the password. Play around with the tiny VM. When the fun ends, run “halt” to shutdown UML.

0.4 Attach GDB

It is fun to add breakpoints and view specific code in GDB. To do this, we have to first find the process id (PID) of the main UML process. Run the following command to find the UML pid. The output should show multiple PIDs

$ ps aux | grep ubda

0 t teja 27089 12160 2 80 0 - 17629 ptrace 16:52 pts/6 00:00:18 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

1 S teja 27094 27089 0 80 0 - 17629 read_e 16:52 pts/6 00:00:00 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

1 S teja 27095 27089 0 80 0 - 17629 poll_s 16:52 pts/6 00:00:00 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

1 S teja 27096 27089 0 80 0 - 17629 poll_s 16:52 pts/6 00:00:00 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

1 t teja 27097 27089 0 80 0 - 5206 ptrace 16:52 pts/6 00:00:00 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

1 t teja 27288 27089 0 80 0 - 5339 ptrace 16:52 pts/6 00:00:00 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

1 t teja 27352 27089 0 80 0 - 4990 ptrace 16:52 pts/6 00:00:00 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

1 t teja 27353 27089 0 80 0 - 5294 ptrace 16:52 pts/6 00:00:00 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

1 t teja 29405 27089 0 80 0 - 5003 ptrace 16:53 pts/6 00:00:00 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

1 t teja 29408 27089 0 80 0 - 5390 ptrace 16:53 pts/6 00:00:00 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

1 t teja 29410 27089 1 80 0 - 5717 ptrace 16:53 pts/6 00:00:08 ./linux ubda=../rootfs_pool/net_test.rootfs.20150203 mem=50M

0 S teja 30278 3682 0 80 0 - 5500 pipe_w 17:05 pts/1 00:00:00 grep --color=auto ubda

The third column is the process id and the fourth column is the parent PID. In the above output PID 27089 starts and then spawns the other threads. Attach gdb to the the main parent thread, which is 27089 in the above example.

sudo gdb -p 27089

GDB will read the symbols from linux and attach itself. Play around, check the backtrace, etc. All globals are now accessable.

0.5 A private UML subnet

In most cases we want to play around with two UMLs connected to each other and ignorant of the rest of the world. In such cases, the simplest way is to connect them using the mcast transport. Make copies of the rootfs, for each UML instance. In this case have two copies ready, and run them with one additional argument “eth0”. This will add an additional eth0 interface. We set eth0 to mcast. i.e. the eth0 interfaces in both the instances are connected over mcast.

./linux ubda=net_test.rootfs.20150203_1 mem=256M eth0=mcast

./linux ubda=net_test.rootfs.20150203_2 mem=256M eth0=mcast

Assign addresses to the eth0 interfaces, and they are ready. You try pinging the other UML. Command to assign address is:

ip address add 192.168.1.1/24 dev eth0

The full mcast readme is here. It contains details to create more complex mcast networks.

On assigning mcast to a eth device, each UML instance opens sockets which listen to multicast traffic. If a multicast address is not assigned (like above), all the instances listen to 239.192.168.1 . All packets are received and then filtered out based on destination MAC address. The packets can even be seen in packetdumps collected in the host OS. Quoting from the above readme, “It’s basically like an ethernet where all machines have promiscuous mode turned on”. Bad for performance, but very easy to setup.

N2. Packet RX path 1 : Basic

This page describes the code flow while receiving a packet. It begins with the packet just entering the core networking code, top half and bottom half processing, basic flow through the IP and UDP layers and finally the packet is enqueued into a socket queue.

Additionally hash based software packet steering across CPUs (RPS and RSS), Inter Processor Interrupts (IPI) and scheduling softirq processing is described. They can be ignored in the first read and can be revisited in later runs after gaining additional context. These sections have been marked OPTIONAL. NAPI will be described in later pages, all NAPI APIs are ignored now.

N2.1 Enter the Core, top half processing

We assume that the driver has already picked up the packet data and has created a skb. (We will look at how drivers create skbs in the page describing NAPI). This packet needs to be sent up to the core. The kernel provides two simple function calls to do this.

netif_rx()

netif_rx_ni()

netif_rx() does two things,

-

Enqueue the packet into a queue which contains packets that need processing. The kernel maintains a softnet_data structure for each CPU. It is the core structure that facilitates network processing. Each softnet_data struct contains a “input_pkt_queue” into which packets that need to be processed will be enqueued. This queue is protected by a spinlock that is part of the queue (calls to rps_lock() and rps_unlock() are to lock/unlock the spinlock). The input_pkt_queue is of type sk_buff_head, which is used within by kernel to represent skb lists. Before enqueuing, if the queue length is more than

netdev_max_backlog(whose default length is 1000), the packet is dropped. This value can be modified by changing/proc/sys/net/core/netdev_max_backlog. For each each packet drop sd->dropped is incremented. Certain numbers are maintained by softnet_data, I’ll add a page describing the struct. TODO -

After successfully enqueueing the packet, netif_rx schedules softirq processing if it has not already been scheduled. The next section describes how softirq is scheduled.

Parts of the code have been added below. All the core networking functions are described in net/core/dev.c.

netif_rx()

{

netif_rx_internal()

{

enqueue_to_backlog()

{

// checks on queue length

rps_lock(sd);

__skb_queue_tail(&sd->input_pkt_queue, skb);

rps_unlock(sd);

____napi_schedule(sd, &sd->backlog)

{

// if not scheduled already schedule softirq

__raise_softirq_irqoff(NET_RX_SOFTIRQ);

}

}

}

}

netif_rx() and netif_rx_ni() are very similar except the later additionally begins softirq processing immediately, which will be explained in subsequent section.

This ends the top half processing, bottom half was scheduled, which will undertake rest of the packet processing.

N2.2 Softirqs, Softirq Scheduling [OPTIONAL]

One detail that I have ignored in the previous discussion is to specify which CPU the top and bottom half actually run.

The top half runs in the hardware interrupt (IRQ) context, which is a kernel thread which can be on any CPU. (This description is not really true, I have a separate page planned on interrupts where this will be described in more detail. Till then this way of visualizing it is not really wrong). Say it runs on CPU0, i.e. netif_rx() is called on CPU0. The packet will be enqueued onto CPU0’s input_packet_queue. The kernel thread will then schedule softirq processing on CPU0 and exit. The bottom half will also run on the same CPU. This section describes how the bottom half is scheduled by the top half and how softirq begins.

Just Google what hard interrupts (IRQ) and soft interrupts (softirq) are for some background. Hardware interrupts (IRQs) will stop all processing. For interrupts that take very long to run, some work is done in IRQ and the rest is done in a softirq. Packet processing is one such task that takes very long to complete so NET_RX is the corresponding soft interrupt which takes care of packet processing.

Linux currently has the following softirqs declared in include/linux/interrupt.h

enum

{

HI_SOFTIRQ=0,

TIMER_SOFTIRQ,

NET_TX_SOFTIRQ,

NET_RX_SOFTIRQ,

BLOCK_SOFTIRQ,

IRQ_POLL_SOFTIRQ,

TASKLET_SOFTIRQ,

SCHED_SOFTIRQ,

HRTIMER_SOFTIRQ, /* Unused */

RCU_SOFTIRQ,

NR_SOFTIRQS

};

The names are mostly indicative of the subsystem each softirq serves. During kernel initialization, a function is registered for each of these softirqs. When softirq processing is needed, this function is called.

For example after core networking init is done, net_dev_init() registers net_rx_action and net_tx_action as the functions corresponding to NET_RX and NET_TX.

open_softirq(NET_TX_SOFTIRQ, net_tx_action);

open_softirq(NET_RX_SOFTIRQ, net_rx_action);

A softirq is scheduled by calling raise_softirq(), which internally disables irqs and calls __raise_softirq_irqoff(). This function sets a bit corresponding to the softirq in the percpu bitmask irq_stat.__softirq_pending. The bitmask is used to track all pending softirqs on the CPU. This operation must be done with all interrupts are disabled on the CPU. Without interrupts disabled, the same bitmask can be overwritten by another interrupt handler, undoing the current change.

Grepping for ksoftirqd in ps, should show multiple threads, one for each CPU. These are threads spawned during init to process pending softirqs on each of the CPUs. Periodically the scheduler will allow ksoftirqd to run, and if any of the bits are set, it’s registered function is called.

During packet RX, net_rx_action() is called.

The CFS scheduler makes sure that all threads get their fair share of CPU time. So ksoftirqd and an application thread will both get their fair share. But, during softirq processing, ksoftirqd disables all irqs and the scheduler has no way of interrupting the thread. Hence all registered functions are have checks to prevent the ksoftirqd from high-jacking the CPU for too long.

In this case, a buggy net_rx_action() function may be able to push packets into the socket queue but the application never will never get a chance to actually read the packets.

To conclude, netif_rx() calls __raise_softirq_irqoff(NET_RX_SOFTIRQ) to schedule softirq processing on the current CPU. The ksoftirqd running on the current CPU will check the bitmask, since NET_RX_SOFTIRQ is pending will call net_rx_action()

N2.2.1 Run Softirq Now

Other than softirq being scheduled by the scheduler, it is sometimes necessary to kick start processing. For example when a sudden burst of packets arrive, due to delays in softirq processing, packets might be dropped. In such cases, when a burst is detected, kick-starting packet processing is helpful. In the above example, calling netif_rx_ni() will kick start packet processing, in addition to enqueuing the packet .

N2.3 Packet Steering (RSS and RPS) [OPTIONAL]

RSS and RPS are techniques that help with scaling packet processing across multiple CPUs. They allow distribution of packet processing across CPUs, while restricting a flow to a single CPU. i.e. each flow is assigned a CPU and flows are distributed across CPUs.

N2.3.1 Packet flow hash

Firstly To identify packet flows, a hash is computed based on the following 4-tuple.

(source address, destination address, source port, destination port).

For certain protocols that do not support ports, a tuple containing just the source and destination addresses is used to compute the hash.

The hash is computed in __skb_get_hash(). After computing the hash, it is updated in skb->hash.

Some drivers have the hardware to offload hash computation, which is then set by the driver before passing the packet to the core networking layer.

N2.3.2 RSS: Receive Side Scaling

RSS acheives packet steering by configuring receive queues (usually one for each CPU), and by configuring seperate interrupts for each queue and pinning the interrupts to the specific CPU. On receiving a packet, based on it’s hash the packet is put in the right queue and the corresponding interrupt is raised.

N2.3.3 RPS: Receive Packet Steering

RPS is RSS in software. While pushing the packet into the core network through netif_rx() or netif_receive_skb(), a CPU is chosen for the packet based on the skb->hash. The packet is then enqueued into the target CPU’s input_packet_queue. Since the operation must have all interrupts disabled, a softirq cannot be directly scheduled on different core. So an Inter Processor Interrupt is used to schedule softirq processing on the other core.

After RSS decides to put the packet on a remote core, in the rps_ipi_queued() function, the target CPU’s softnet struct is added to the current core’s sd->rps_ipi_next which is a list to softnet structs for which an IPI has to be sent. During the current core’s softirq processing, all the accumulated IPIs are sent to those cores by traversing the rps_ipi_next list.

IPIs are actually sent by scheduling a job on a remote core by calling smp_call_function_single_async() during NET_RX processing.

netif_rx_internal(skb)

{

cpu = get_rps_cpu(skb->dev, skb, &rflow);

enqueue_to_backlog(skb, cpu)

{

sd = &per_cpu(softnet_data, cpu); //get remote cpu sd

__skb_queue_tail(&sd->input_pkt_queue, skb); //enqueue

rps_ipi_queued(sd); //add sd to rps_ipi_next

}

}

Kernel documentation describes these methods and also provides instructions to configure them.

N2.4 Softirq processing NET_RX

Softirq processing was scheduled by the bottom half, and NET_RX begins. All of NET_RX processing is done in net_rx_action(). The logic is to process packets until one of the following events occurs:

- The packet queue is empty. In this case NET_RX softirq stops.

- NET_RX has been running for longer than

netdev_budget_usecs, whose default value is 2 milliseconds. - NET_RX has processed more than

netdev_budget(fixed value of 300) packets. (We will revist this constraint while looking at NAPI)

In cases 2 and 3, there still might be packets to process, in which case NET_RX will schedule itself before exiting, (i.e. set the NET_RX_SOFTIRQ bit before exiting), so it can process some more packets in another session. In cases 2 and 3 NET_RX processing is almost at it’s limits. To indicate this sd->time_squeeze is incremented, so that a few parameters can be tuned. We will revisit this while discussing NAPI.

Softirq processing is done with elevated privilages, which can easily cause it to high-jack the complete CPU. The above constraints are to make sure that softirq processing allows the applications run. If the softirq were to high-jack the CPU, the user application would never run, and the end user would see applications not responding.

The actual function that dequeues packets from input_pkt_queue and begins processing them is process_backlog(). After dequeueing the packet it calls __netif_receive_skb() which pushes the packet up into the protocol stacks.

For now ignore the napi part of net_rx_action(), it calls napi_poll() which will call the registered poll function n->poll(). The poll function is set to process_backlog. For now believe me even if it does not make much sense. It will make sense one we look at the NAPI framework.

net_rx_action()

{

unsigned long time_limit = jiffies +

usecs_to_jiffies(netdev_budget_usecs);

int budget = netdev_budget;

budget -= napi_poll(n, &repoll)

{

work = n->poll(n, weight) // same as process_backlog

process_backlog(n, weight)

{

skb_queue_splice_tail_init(&sd->input_pkt_queue,

&sd->process_queue);

while ((skb = __skb_dequeue(&sd->process_queue)))

__netif_receive_skb(skb);

}

}

// time and budget constraints

if (unlikely(budget <= 0 ||

time_after_eq(jiffies, time_limit))) {

sd->time_squeeze++;

break;

}

}

N2.5 __netif_receive_skb_core()

__netif_receive_skb() internally calls __netif_receive_skb_core() for the main packet processing. __netif_receive_skb_core() is large function which handles multiple ways in which a packet can be processed. This section tries to cover some of them.

-

skb timestamp:

skb->tstampfield is filled with the time at which the kernel began processing the packet. This information is used in various protocol stacks. One example is it’s usage by AF_PACKET, which is the protocol tools like wireshark use to collect packet dumps. AF_PACKET extracts the timestamp fromskb->tstampand provides to userspace as part of struct tpacket_uhdr. This timestamp is the one that wireshark reports as the time at which the packet was received. -

Increment softnet stat:

sd->processedis incremented, which is representative of the number of packets that were processed on a particular core. The packets might be dropped by the kernel for various reasons later, but they were processed on a particular core. -

packet types: At this point the packet is sent to all modules that want to process packets. The list of packet types that the kernel supports is defined in

include/linux/netdevice.h, just above PTYPE_HASH_SIZE macro definition. Other than the ones described above, promiscuous packet types (processes packets irrespective of protocol) like AF_PACKET and custom packet_types added by various drivers and subsystems are all supported. Each of them fill up a packet_type structure and register it by callingdev_add_pack(). Based on the type and netdevice the struct is added to the respective packet_type list.__netif_receive_skb()based on the skb’s protocol and netdevice traverses the particular list, delivering the packet by callingpacket_type->func(). All registered packet_types can be seen at/proc/net/ptype.

$ cat /proc/net/ptype

Type Device Function

ALL tpacket_rcv

0800 ip_rcv

0011 llc_rcv [llc]

0004 llc_rcv [llc]

0806 arp_rcv

86dd ipv6_rcv

The ptype lists are described below:

-

ptype_all: It is a global variable containing promiscuous packet_types irrespective of netdevice. Each AF_PACKET socket adds a packet_type to this list. packet_rcv() is called to pass the packet to userspace. -

skb->dev->ptype_all: Per netdevice list containing promiscuous packet_types specific to the netdevice. TODO: find an example. -

ptype_base: It is a global hash table, with key as the last 16bits ofpacket_type->typeand value as a list of packet_type. For exampleip_packet_typewill be added to ptype_base[00], withptype->funcset to ip_rcv. While traversing, based onskb->protocol’s last 16bits, a list is chosen and the packet is delivered to all packet_types whose type matches skb->protocol. -

skb->dev->ptype_specific: Per netdevice list containing protocol specific packet types. The packet is delivered to if the skb->protocol matches ptype_type. Mellanox for example adds apacket_typewith type set toETH_P_IP, to process all UDP packets received by the driver. Seemlx5e_test_loopback(). The name suggests some sort of loopback test. I am not really sure how. IDK.One important detail is that the same packet will be sent to all applicable packet_type-s. Before delivering the skb,

skb->usersis incremented.skb->usersis the number users that are (ONLY) reading the packet. Each module after completing necessary processing callkfree_skb(), which will first decrement users, and then free the skb only if skb->users hits zero. So the same skb pointer is shared by all the modules, and the last user will free the skb. -

RX handler: Drivers can register a rx handler, which will be called if a packet is received on the device. The

rx_handlercan return values based on which packet processing can stop or continue. If RX_HANDLER_CONSUMED is returned, the driver has completed processing the packet and__netif_receive_skb_core()can stop processing further. If RX_HANDLER_PASS is returned, skb processing will continue. The other values supported and ways to register/unregister a rx handler are available ininclude/linux/netdevice.h, above enum rx_handler_result. For example if the driver wants to support a custom protocol header over IP, a rx handler can be registered which will process the outer header and return RX_HANDLER_PASS. Futher IP processing can continue when the packet is delivered to ip_packet_type. Note that the packet dumps collected will still contain the custom header. (It is actually better to return RX_HANDLER_CONSUMED and enqueue the packet by calling netif_receive_skb. This will allow the driver to run the packet through GRO offload engine and to distribute packet processing with RPS. Ignore this comment for now.) -

Ingress Qdisc processing. We will look at it in a different page, after we have looking at Qdiscs and TX. Similar to RX handler, certain registered functions run on the packet and based on the return value, the processing can stop or continue. But unlike a RX handler, the functions to run are added from userspace.

The order in which the __netif_receive_skb_core() delivers (if applicable) the packets is:

- Promiscuous packet_type

- Ingress qdisc

- RX handler

- Protocol specific packet_type

Finally if if none of them consume the packet, the packet is dropped and netdevice stats are incremented.

__netif_receive_skb_core()

{

net_timestamp_check(!netdev_tstamp_prequeue, skb)

{

__net_timestamp(SKB);

}

__this_cpu_inc(softnet_data.processed);

//Promiscuous packet_type

list_for_each_entry_rcu(ptype, &ptype_all, list) {

if (pt_prev)

ret = deliver_skb(skb, pt_prev, orig_dev);

pt_prev = ptype;

}

list_for_each_entry_rcu(ptype, &skb->dev->ptype_all, list) {

if (pt_prev)

ret = deliver_skb(skb, pt_prev, orig_dev);

pt_prev = ptype;

}

//Ingress qdisc

skb = sch_handle_ingress(skb, &pt_prev, &ret, orig_dev);

//RX handler

rx_handler = rcu_dereference(skb->dev->rx_handler);

switch (rx_handler(&skb)) {

case RX_HANDLER_CONSUMED:

ret = NET_RX_SUCCESS;

goto out;

case RX_HANDLER_PASS:

break;

default:

BUG();

}

//Protocol specific packet_type

deliver_ptype_list_skb(skb, &pt_prev, orig_dev, type,

&ptype_base[ntohs(type) & PTYPE_HASH_MASK]);

deliver_ptype_list_skb(skb, &pt_prev, orig_dev, type,

&skb->dev->ptype_specific);

}

N2.6 IP Processing

N2.6 IP layer Processing

Assuming the skb is an IP packet, the skb will enter ip_rcv(), which then calls ip_rcv_core(). ip_rcv_core validates the IP header (checksum, checks on ip header length, etc), updates ip stats and based on the transport header set in the IP header will set skb->transport_header.

The protocol stacks maintain counts when packets enter and counts of the number of packets that were dropped. These numbers can be seen at /proc/net/snmp. The correspnding enum can be found at include/uapi/linux/snmp.h.

ip_rcv_core()

{

__IP_UPD_PO_STATS(net, IPSTATS_MIB_IN, skb->len);

iph = ip_hdr(skb);

if (unlikely(ip_fast_csum((u8 *)iph, iph->ihl)))

goto csum_error;

skb->transport_header = skb->network_header + iph->ihl*4;

return skb;

csum_error:

__IP_INC_STATS(net, IPSTATS_MIB_CSUMERRORS);

return NULL;

}

ip_rcv then sends the packet through the netfilter PREROUTING chain. The netfilter subsystem allows the userspace to filter/modify/drop packets based on the packet’s attributes. Tools iptables/ip6tables are used to add/remove IP/IPv6 rules. The netfilter subsystem contains 5 chains, PREROUTING, INPUT, FORWARD, OUTPUT and POSTROUTING. Each chain contains rules and corresponding actions. If a rule matches a packet, the corresponding action is taken. We will look at them in a separate page dedicated to iptables. An easy example is that it is used to act as a firewall to drop unwanted traffic.

transport layer (TCP/UDP)

ip_local_deliver_finish()

🠕 |

INPUT OUTPUT

| 🠗

ROUTING DECISION ----- FORWARDING ----- +

🠕 |

PREROUTING POSTROUTING

| 🠗

ip_rcv()

CORE NETWORKING

While receiving a packet, at the end of ip_rcv() it enters the PREROUTING chain, at the end of which it enters ip_rcv_finish(). Based on the packet’s ip address, a routing decision is taken if the packet should be locally consumed or if it is to forwarded to a different system. (I’ll describe this in more detail in a separate page). If the packet should be locally consumed ip_local_deliver() is called. The packet then enters the INPUT chain, and finally comes out at ip_local_deliver_finish().

Based on the protocol set in the IP header, the corresponding protocol handler is called. If a transport protocol is supported over IP, the corresponding handler is registered by calling inet_add_protocol().

Yes, this section skips a lot of content, I’ll add a separate sections for IP processing, netfilter (esp. nftables) and routing.

N2.7 UDP layer

If the packet is an UDP packet, udp_rcv is the protocol handler called, which internally calls __udp4_lib_rcv(). First the packet header is validated, pseudo ip checksum is checked and then if the packet is unicast, based on the port numbers the socket is looked up, and then udp_unicast_rcv_skb() is called, which then calls udp_queue_rcv_skb().

udp_queue_rcv_skb() checks if the udp socket has a registered function to handle encapsulated packets. If the handler is found the corresponding handler is called, which processes the packet further. For example in case of XFRM encapsulation xfrm4_udp_encap_rcv() is registered as the handler. (XRFM short for transform, adds support to add encrypted tunnels in the kernel).

If no encap_rcv handler is found, full udp checksum is done and __udp_queue_rcv_skb() is called. Internally it calls __udp_enqueue_schedule_skb() which checks if the sk memory is sufficient to add the packet and then calls __skb_queue_tail() to enqueue the packet into sk_receive_queue. If the application has called the recv() system call and is waiting for the packet the process moves to __TASK_STOPPED state and the scheduler no longer schedules it. sk->sk_data_ready(sk) is called so that it’s state is set to TASK_INTERRUPTIBLE, and the scheduler then schedules the application. On waking up, the packet is dequeued from the queue and the application recv()s the packet data. Receiving a packet and socket calls will be described in a separate page.

__udp4_lib_rcv()

{

uh = udp_hdr(skb);

if (udp4_csum_init(skb, uh, proto)) //pseudo ip csum

goto csum_error;

sk = __udp4_lib_lookup_skb(skb, uh->source, uh->dest, udptable);

if (sk) {

return udp_unicast_rcv_skb(sk, skb, uh)

{

ret = udp_queue_rcv_skb(sk, skb);

//continued below..

}

}

}

udp_queue_rcv_skb(sk, skb)

{

struct udp_sock *up = udp_sk(sk);

encap_rcv = READ_ONCE(up->encap_rcv);

if (encap_rcv) {

if (udp_lib_checksum_complete(skb))

goto csum_error;

ret = encap_rcv(sk, skb);

}

udp_lib_checksum_complete(skb);

return __udp_queue_rcv_skb(sk, skb)

{

rc = __udp_enqueue_schedule_skb(sk, skb)

{

rmem = atomic_read(&sk->sk_rmem_alloc);

if (rmem > sk->sk_rcvbuf)

goto drop;

__skb_queue_tail(&sk->sk_receive_queue, skb);

sk->sk_data_ready(sk);

// == sock_def_readable()

}

}

}

N2. Packet TX path 1 : Basic

This page contains the basic code flow while transmitting a packet. It begins with the userspace sendmsg, enters the UDP and IP stacks, finds a route, enters core networking and finally being handed over to the driver which pushes it out. TX, unlike RX, can happen without a softirq being raised. The processing happens completely in the application context. In this page I describe packet transmission without a softirq being raised. I’ll cover how qdiscs are used in a separate page, after which NET_TX with qdiscs will be described.

UDP & IP stack processing and routing will be described in detail in a separate page, this is just a basic overview. We will end the discussion by handling over the packet to the driver. How the driver actually transmits the packet will be described in later pages.

N3.1-3.2 sendmsg() from userspace

3.1 sendmsg()

After opening a UDP socket the application gets a fd as a handle for the underlying kernel socket. The application sends data into the socket by calling send() or sendmsg() or sendto(), all of which will send out UDP data.

On calling the sendmsg() system call, the syscall trap will save the application’s process context and switch to running the kernel code. Kernel code runs in the application context, i.e. any traces that print the pid of the process will return the application’s pid. If you do not know this, believe me for now, signals & syscalls are explained in a separate page. The kernel registers functions that are run for each system call. The registered function’s name is __do_sys_ + syscall name. In this case the function is __do_sys_sendmsg(), which internally calls __sys_sendmsg(). The arguments passed to the call are the fd, the msghdr struct and flags.

The first step is to get the kernel socket from the fd. Each fd the application holds is a handle to a kernel socket. The socket can be for an open file, a UDP socket, UNIX socket, etc. The socket is usually represented with the var sock. ___sys_sendmsg is called with the sock, msghdr and flags. It simply checks if the arguments are valid, copies the msghdr (allocated in userspace) into kernel memory and then calls sock_sendmsg().

sock_sendmsg() checks if the application is allowed to proceed. Linux has kernel modules like SELinux and AppArmour which audit each system call and based on the configured rules allow or reject the system call. If security_socket_sendmsg() does not return any errors, sock_sendmsg_nosec() is called, which internally calls the sock->ops->sendmsg(). socket ops are registered during socket creation based on the protocol family (AF_INET, AF_INET6, AF_UNIX) and socket type (SOCK_DGRAM, SOCK_STREAM). Since a udp socket is a SOCK_DGRAM socket of AF_INET family the ops registered are inet_dgram_ops, defined in net/ipv4/af_inet.c. And sock->ops->sendmsg() is inet_sendmsg().

SYSCALL_DEFINE3(sendmsg, int, fd, struct user_msghdr __user *, msg,

unsigned int, flags)

{

return __sys_sendmsg(fd, msg, flags) {

sock = sockfd_lookup_light(fd, &err, &fput_needed);

err = ___sys_sendmsg(sock, msg, &msg_sys, flags) {

//copy msg (usr mem) into msg_sys (kernel mem)

err = copy_msghdr_from_user(msg_sys, msg, NULL, &iov);

sock_sendmsg(sock, msg_sys) {

int err = security_socket_sendmsg();

if(err)

return;

sock_sendmsg_nosec(sock, msg_sys) {

sock->ops->sendmsg(sock, msg_sys);

//inet_sendmsg();

}

}

}

}

}

2.2 UDP & IP sendmsg

At this point we will begin using the networking struct sock (different from a socket), represented usually with the var sk. Each socket will either have a valid sock or a file. In our case a sock->sk will contain a valid sock. We check if socket needs to be bound to a ephemeral port, and then call sk->sk_prot->sendmsg(). During socket creation, the sock is added to the socket, and protocol handlers are registered to the sock. In this case, for a UDP socket, sk_prot is set to udp_prot (defined in net/ipv4/udp.c). And sk_prot->sendmsg is set to udp_sendmsg(). The arguments have not changed, we will pass sk and msghdr.

Till this point we have not begun constructing the packet, the focus was more on socket options. udp_sendmsg will first extract the destination address (usually variable daddr) and dest port (usually dport), from the msghdr->msg_name. The source port is extracted from the sock. This information is passed to find a route for the packet. The first time a packet is sent out of a sock, the route has to be found by going through the routing tables. This route result is saved in sk->sk_dst_cache, which is used for packets that are sent later. At this point the packet’s source address is extracted from the route. All the details about the packet’s flow are saved in struct flowi4, which are saddr, daddr, sport, dport, protocol, tos (type of service), sock mark, UID (user identifier), etc. We now have all the information, the addresses, ports and certain information to fill in the IP header with. We can begin filling in the packet. ip_make_skb() will create the skb, and the skb will be sent out by calling udp_send_skb().

int inet_sendmsg(struct socket *sock, struct msghdr *msg, size_t size)

{

inet_autobind(sk)

DECLARE_SOCKADDR(struct sockaddr_in *, usin, msg->msg_name);

daddr = usin->sin_addr.s_addr; // get daddr from msghdr

dport = usin->sin_port;

rt = (struct rtable *)sk_dst_check(sk, 0);

if (!rt) {

flowi4_init_output();

// pass daadr, dport...

rt = ip_route_output_flow(net, fl4, sk);

sk_dst_set(sk, dst_clone(&rt->dst));

//next time sendmsg is called, sk_dst_check() will return the rt

}

saddr = fl4->saddr; // route lookup complete, saddr is known

skb = ip_make_skb(); // create skb(s)

err = udp_send_skb(skb, fl4, &cork); // send it to ip layer

}

N3.3-3.4 alloc skb and send_skb

N3.3 Alloc skb, fill it up (OPTIONAL)

ip_make_skb() is called to create a skb and is provided flowi4, sk, msg ptr, msg length and route as the arguments. This is a generic function that can be called from any tranport layer, here UDP calls it and the argument tranhdrlen (transport header length) is equal to sizeof an udp header. Additionally, the length field is equal to the amount of data below the ip header, i.e. length contains payload length plus udp header length.

A skb queue head is inited, which contains a list of skbs. Note that a head itself DOES NOT contain data. It is a handle to a skb list. On init, the list is empty, with sk_buff_head->next equal to sk_buff_head->prev equal to its own address, and sk_buff_head->qlen is zero. Multiple skbs will be added to it if the msg size is greater than the MTU, forcing IP fragmentation. For now, we will ignore ip fragmentation, so a single skb will be added to it later.

Next __ip_append_data() is called to fill in the queue with the skb(s). The primary goal of __ip_append_data is to estimate memory necessary for the packet(s) and accordingly create and enqueue skb(s) into the queue. The skb needs memory necessary to accommodate:

- link layer headers: each device during init sets

dev->hh_len. Hardware header length (usually represented by varhh_len) is the maximum space the driver will need to fill in header(s) below the network header. e.g. ethernet header is added by ethernet drivers. - IP and UDP headers. The function also handles the case where the packet needs IP fragmentation. In that case, additional logic to allocate memory for fragmentation headers is necessary.

- Payload. Obviously.

Additionally some extra tail space is also provided while allocating the skb. The allocation logic is shown in the code below. Once the calculation is done, sock_alloc_send_skb() is called, which internally calls sock_alloc_send_pskb() to allocate the skb. Each skb must be accounted for in the sock where it was created (TX) or in the sock where it is destined to (RX). This is to control the memory used by packets. Each sock will have restrictions on the amount of memory it can use. In this case wmem, the amount of data written into the socket that hasn’t transmitted yet, is a constraint while allocating data. If wmem is full, sendmsg() call can get blocked (unless the socket is set to non blocking mode) till sock memory is freed. Once data is allocated, the udp payload data is written into the skb. skb->transport_header and skb->network_header are set. IP and UDP headers haven’t been filled yet, but pointers to where they have to be filled are set. The skb is added to the skb queue and finally sock wmem is updated.

Next __ip_make_skb() will fill in ip header. (ignore fragmentation code for now, which will run if the queue has more than one skb). Finally it returns the created skb’s pointer.

struct sk_buff *ip_make_skb()

{

struct sk_buff_head queue;

__skb_queue_head_init(&queue);

err = __ip_append_data()

{

hh_len = LL_RESERVED_SPACE(rt->dst.dev);

fragheaderlen = sizeof(struct iphdr) + (opt ? opt->optlen : 0);

// opt is NULL, fragheaderlen is equal to sizeof(struct iphdr)

datalen = length + fraggap; //fraggap is zero

// length = udphdr len + payload length

fraglen = datalen + fragheaderlen;

alloclen = fraglen;

skb = sock_alloc_send_skb(sk,

alloclen + hh_len + 15,

(flags & MSG_DONTWAIT), &err);// ------- Step 0

skb_reserve(skb, hh_len); // -------------------------- Step 1

data = skb_put(skb, fraglen + exthdrlen - pagedlen);

// exthdrlen & pagedlen are zero. --------------------- Step 2

skb_set_network_header(skb, exthdrlen);

skb->transport_header = (skb->network_header +

fragheaderlen); // --------------------- Step 3

data += fragheaderlen + exthdrlen; // ------------------ Step 4

// move pointer to where payload starts

copy = datalen - transhdrlen - fraggap - pagedlen;

// amount of payload data that needs to be copied.

// datalen = payload len + udp header len.

// transhdrlen = udp header len

getfrag(from, data + transhdrlen, offset, copy, fraggap, skb);

// getfrag is set to ip_generic_getfrag()

{ //copy and update csum

csum_and_copy_from_iter_full(to, len, &csum, &msg->msg_iter);

skb->csum = csum_block_add(skb->csum, csum, odd);

}

length -= copy + transhdrlen; // copied length is subtracted

skb->sk = sk;

__skb_queue_tail(queue, skb);

refcount_add(wmem_alloc_delta, &sk->sk_wmem_alloc);

}

return __ip_make_skb(sk, fl4, &queue, cork)

{

skb = __skb_dequeue(queue);

iph = ip_hdr(skb);

iph->version = 4;

iph->ihl = 5;

iph->tos = (cork->tos != -1) ? cork->tos : inet->tos;

iph->frag_off = df;

iph->ttl = ttl;

iph->protocol = sk->sk_protocol;

ip_copy_addrs(iph, fl4); // copy addresses from flow info

}

}

N3.3.1 (Older?) Skb allocation logic

The figure below which shows how pointers in skb meta data are being updated corresponding to steps commented in the code above. These pictures are from davem’s skb_data page which describes udp packet data being filled in a skb. This logic is very different from what I have described above. It is possible that this was the allocation logic earlier. It is entirely possible I have missed something. Comments are welcome.

3.4 UDP and IP send_skb

udp_send_skb() is simple, it fills in the UDP header, computes the checksum (csum), and sends the skb to ip_send_skb(). If ip_send_skb returns an error, SNMP value SNDBUFERRORS is incremented. And if everything goes well OUTDATAGRAMS is incremented.

ip_send_skb() calls ip_local_out(), which calls __ip_local_out() The packet then enters the OUTPUT chain, at the end of which dst_output() is called. On finding the packet’s route, skb_dst(dst)->output is set to ip_output.

The skb then enters the POSTROUTING chain, at the end of which ip_finish_output() is called. ip_finish_output() checks if the packet needs fragmentation (in certain cases, the packet might have modified or the packet route might change, which may require ip fragmentation). Ignoring the fragmentation, ip_finish_output2() is called.

transport layer (TCP/UDP)

🠗 __ip_local_out()

OUTPUT

INPUT 🠗 dst_output()

| |

ROUTING DECISION --- FORWARDING --- +

| |

PREROUTING 🠗 ip_output()

| POSTROUTING

🠗 ip_finish_output()

CORE NETWORKING

ip_finish_output2() first checks if the interface the packet is being routed out to has a corresponding neighbour entry (neigh). The neighbour subsystem is how the kernel manages link local connections corresponding to IP addresses. i.e. ARP to manage ipv4 addresses and NDISC for ipv6 addresses. If the next hop for an interface is not known, the corresponding messages are triggered, and is added to the corresponding cache. The current arp cache can be checked by printing /proc/net/arp. For now, the assuming the neigh can be found, we will proceed. neigh_output is called, which calls neigh_hh_output(). (An output function is registered in the neigh entry, which will be called if the neigh entry has expired. Ignoring this for now.)

neigh_hh_output() adds the hardware header necessary into the headroom. The neigh entry contains a cached hardware header, which is added while adding a neigh entry into the neigh cache (after a successful ARP resolution or neighbour discovery) is complete. More of this will be covered in a separate page covering the neigh subsystem.

Now the skb has all necessary headers, pass it to the core networking subsystem which will let the driver send the packet out.

static int ip_finish_output2()

{

neigh = __ipv4_neigh_lookup_noref(dev, nexthop);

neigh_output(neigh, skb)

{

hh_alen = HH_DATA_ALIGN(hh_len); //align hard header

memcpy(skb->data - hh_alen, hh->hh_data,

hh_alen);

}

__skb_push(skb, hh_len); // add the hh header

return dev_queue_xmit(skb);

}

N3.5-3.8 NET_TX and driver xmit

N3.5 Core networking

The current section will cover the simplest case of sending out a packet. NET_TX softirq will not be scheduled, in most cases packets will be sent out this way.

Every real network device on creation has atleast one TX queue (var “txq”). By real I mean actual physical devices: ethernet or wifi interfaces. Virtual network devices like loopback, tun interfaces, etc are “queueless”, i.e. they have a txq and a default Queue Discipline (qdisc), but a function is not added to enqueue packets.

Run “tc qdisc show dev lo”, and it should show “noqueue” as the only queue. Other real devices have queues with specific properties, as shown below eth0 has a fq_codel queue. I’ll add a separate page for qdiscs, for now ignore them.

$ tc qdisc show dev lo

qdisc noqueue 0: root refcnt 2

$ tc qdisc show dev eth0

qdisc fq_codel 0: root refcnt 2 limit 10240p flows 1024 quantum 1514 target 5.0ms

interval 100.0ms memory_limit 32Mb ecn

A small optional section on how loopback’s xmit works is added next. For now assume our device has a queue.

__dev_queue_xmit() finds the tx queue and qdisc and if a enqueue function is present, calls __dev_xmit_skb() which calls the function to enqueue the skb into the qdisc. At this point the skb is in the queue. We move forward without any skb pointer held. After enqueueing the skb, __qdisc_run() is called to process packets (if possible) that have been enqueued. If no other process needs the cpu and less than 64 packets have been processed in the current context, __qdisc_run() will continue processing packets. __qdisc_run calls qdisc_restart() internally, which dequeues skbs from the queue and calls sch_direct_xmit(), which calls dev_hard_start_xmit() and it finally calls xmit_one(). xmit_one() will transmit one skb from the queue.

__dev_queue_xmit()

{

struct netdev_queue *txq;

struct Qdisc *q;

txq = netdev_pick_tx(dev, skb, sb_dev);

q = rcu_dereference_bh(txq->qdisc);

rc = __dev_xmit_skb(skb, q, dev, txq)

{

rc = q->enqueue(skb, q, &to_free) & NET_XMIT_MASK;

__qdisc_run(q)

{

//while constraints allow

qdisc_restart(q, &packets)

{

skb = dequeue_skb(q);

sch_direct_xmit(skb);

}

}

qdisc_run_end(q);

}

}

N3.6 NET_TX (OPTIONAL)

Ironic that the article on NET_TX has this section marked as OPTIONAL. But in simple scenarios, NET_TX softirq is almost never raised. After enqueueing the packet, __qdisc_run() cannot process packets because if one of these conditions is not met:

- no other process is waiting for this CPU

- the current process has enqueued less than 64 packets.

Then a NET_TX is scheduled to run. __netif_schedule() will raise a NET_TX softirq if it was not already triggered on the CPU. net_tx_action(), the registered function will run __qdisc_run() on the qdisc, after which the flow is same as the case without a softirq raised.

Though we have not covered NAPI yet, net_tx_action() is a dual of net_rx_action(), a root qdisc is a dual of a napi structure, and xmit_one() is a dual of __netif_receive_skb(), with very similar logic but in opposite directions.

N3.7 driver xmit_one()

xmit_one() is the final function, which like __netif_receive_skb() shares the packet with all registered promiscuous packet_types, i.e. the global list ptype_all and per netdevice list dev->ptype_all. This is done within dev_queue_xmit_nit() function. After this, xmit_one calls netdev_start_xmit() which internally calls __netdev_start_xmit() to hand over the packet to the driver by calling ops->ndo_start_xmit() (ndo stands for NetDevice Ops). A net_device_ops struct is registered during netdevice creation, where this function pointer is set by the driver. The driver will then send the packet out via the physical interface.

sch_direct_xmit() -> dev_hard_start_xmit() -> xmit_one()

{

dev_queue_xmit_nit();

//deliver skb to promisc packet types

netdev_start_xmit()

{

const struct net_device_ops *ops = dev->netdev_ops;

return ops->ndo_start_xmit(skb, dev);

}

}

N3.8 loopback xmit (OPTIONAL)

Like described earlier, each device during creation registers certain functions using the net_device_ops structure. Loopback registers loopback_xmit as the function. On sending a packet to loopback, when ops->ndo_start_xmit is called, the packet enters loopback_xmit(). It is a very simple function, which increments stats and calls netif_rx_ni() to begin the RX path of the packet. The end of TX coincides with the start of RX in this function.

The loopback device is described in drivers/net/loopback.c .

loopback_xmit()

{

skb_tx_timestamp(skb);

skb_orphan(skb); // remove all links to the skb.

skb->protocol = eth_type_trans(skb, dev); // set protocol

netif_rx(skb); //RX!!

}

N4. (WIP) Socket Programming BTS

THIS IS A WORK IN PROGRESS, Parts of it may be incomplete or incorrect.

This page describes the magic that happens in the kernel behind the scenes (BTS) while running a server and client exchanging data over TCP. A small introduction with the basics of TCP has been added, a few optional sections describing the internal structure of networking sockets, socket memory accounting, time wait sockets have been added. The TCP subsystem is not described, it requires a dedicated article, this article only mentions the basic functions where necessary. Hereon the application sending the data is the server and one receiving it is the client. Ofcourse, both the applications can be server and client by sending and receiving simultaneously.

N4.1 Basics of TCP

Please skip this section if you have a fair idea how basic TCP operates.

TCP provides reliable, ordered and error-checked delivery of octets. The server divides the data into smaller segments and assigns each of them a sequence number and sends it out. The client sends back an acknowledgement for each sequence received. Reliability is achieved by tracking acknowledgements and retransmitting segments if necessary. The receiver re-assembles the packets using the sequence numbers so the application receives it in the right order. Finally checksum is used to verify the integrity of the data. The application actually knows nothing of how the packets are sent and received, the kernel works all the TCP magic in the background. (Which is why this is a Behind The Scenes Article).

Before TCP begins transmitting the data, the server and client connect to each other and exchange a few parameters. The server begins by binding to a particular port. The client sends a request to the server, a SYN (short for synchronize) packet. (A tcp packet with the SYN flag set in the header is called a SYN packet). The server responds with a SYN packet which also acknowledges the packet sent by the client, i.e. SYN-ACK packet (ACK is short for acknowledgement). The client on receiving the SYN-ACK acknowledges the SYN sent by the server with an ACK. The exchange of these three packets ( SYN, SYN-ACK and ACK) establishes a connection between the server and the client. The connection on both the sides is uniquely identified by the following four tuple: (saddr, daddr, sport, dport) which are short for ( source IP address, destination IP address, source port, destination port) respectively.

Connection termination also happens with the exchange of packets between the server and client. A FIN is sent by the initiator, to which the peer responds with a FIN-ACK (acknowledging the initiator’s FIN). The connection closes with the initiator acknowledging the FIN-ACK.

SERVER CLIENT

fd1 = socket()

listen(fd1, N)

fd3 = accept(fd1) {

fd2 = socket()

connect(fd2) {

<<---- SYN ------

---- SYN-ACK --->> }

} <<---- ACK ------

send(fd3, DATA)

----- DATA --->>

<<--- DATA -----

recv(fd2, DATA);

close(fd2);

<<---- FIN ------

close(fd3); ---- FIN-ACK -->>

<<---- ACK ------

N4.2 socket(int domain, int type, int protocol

Both applications create networking sockets using the socket() system call. The arguments are socket family, socket type and protocol type. The man page describes possible values the arguments take. AF_INET and AF_INET6 are to create a IPv4 and IPv6 sockets respectively. Some of other options are to create UNIX sockets for Inter process communication, NETLINK sockets for monitoring kernel events and communicating with kernel modules, AF_PACKET sockets to capture packets, AF_PPPOX sockets for creating PPP tunnels, etc.

There are two major Socket types:

- SOCK_STREAM: used to send/recv octet streams. For example TCP socket is a STREAM sock. Stream sockets guarantee reliable in order delivery after a two way connection is established. It does not preserve message boundaries. i.e. if the server writes 40 bytes first and then 60 bytes, the client may receive all the 100 bytes in one shot, never knowing that the server wrote it in two parts. Usually both sides agree upon a fixed boundary that is used to detect message boundaries. For example HTTP, which operates over TCP uses “\r\n” (CRLF: Carriage Return Line Feed) as a boundary. See the wiki page describing the HTTP Message format.

- SOCK_DGRAM: Datagram are the exact opposite of SOCK_STREAM, they are connectionless, delivery is unreliable. But message boundaries are preserved, i.e. in the prev example, the client would receive two messages 40 and 60 bytes long (if they were not dropped on the way). An example is UDP.

Protocol field, usually zero, is used if there are multiple protocols for a specific (family, protocol) set. For example both SCTP and TCP both offer SOCK_STREAM services within AF_INET family, UDP and ICMP offer DGRAM services within the AF_INET family. TCP is the default option, so a socket call with family AF_INET, type STREAM_SOCK and protocol set to zero will initialize a TCP socket. Providing IPPROTO_SCTP instead of zero will create a SCTP socket. Explicitly setting IPPROTO_TCP will also create a TCP.

The socket() system call internally calls __sys_socket(), which has two parts to it, firstly it creates a socket and a networking socket (struct sock) and initializes them. The second part is to create provide a fd to the application as a handle to the socket. __sys_socket() first calls sock_create(), which internally calls __sock_create(). __sock_create() checks the input values. It then checks if the application has permissions needed to create the socket. For example packet sockets can be created only by applications with admin privileges. Simple sockets like TCP & UDP dont need any special permissions. Next it allocates a socket. Based on the family, the corresponding create function is called. All protocol families are registered during initialization by calling sock_register(). They can be accessed via the global variable net_families[]. In this case, family AF_INET has the structure inet_family_ops registered, and the create function inet_create() is called.

inet_create() searches among the registered struct proto which corresponds to the protocol input. On finding the protocol sk_alloc() is called to create the corresponding protocol sock, and the registered protocol init is called, in this tcp_v4_init_sock(). The structure of the sock is described below, an optional section. The socket is a BSD socket, while the sock is a networking socket which handles all the protocol functionality and stores the corresponding data. For example, in TCP the tcp sock maintains queues to track packets that have been sent but not yet acknowledged by the peer. Once sock init is done, control returns to __sys_socket().

__sys_socket() then assigns a fd to the socket that was created, and returns this to the application. Any interaction with the network sock will go through the socket. System calls will call the socket call, using the fd the corresponding sock will be found, after which the corresponding protocol function will be invoked. sock->sk points to the sk and sk->sk_socket points to sock. This way knowing one, we can reach the other.

__sys_socket() -> sock_create()

{

sock_create() -> __sock_create()

{

sock = sock_alloc();

sock->type = type;

pf = rcu_dereference(net_families[family]);

err = pf->create(net, sock, protocol, kern);

{

struct inet_protosw *answer;

struct proto *answer_prot;

list_for_each_entry_rcu(answer, &inetsw[sock->type], list) {

//find the right protocol.

if (protocol == answer->protocol) {

break;

}

}

sock->ops = answer->ops; // &inet_stream_ops

answer_prot = answer->prot; // tcp_prot

sk = sk_alloc(net, PF_INET, GFP_KERNEL, answer_prot, kern);

sock_init_data(sock, sk);

sk->sk_protocol = protocol;

sk_refcnt_debug_inc(sk);

err = sk->sk_prot->init(sk);

}

}

return sock_map_fd(sock, flags & (O_CLOEXEC | O_NONBLOCK));

// find a unused fd and bind it to the socket

}

The flow after calling a socket call usually follows the following pattern:

- syscall entry, socket lookup based on fd.

- check if the process has the necessary security permissions

- call the corresponding function from sock->ops

- get sock from the socket, call the function from sk->ops

N4.3 socket, sock, inet_sock, inet_connection_sock and tcp_sock (OPTIONAL)

TODO: Why two parts: socket and sock ? is a socket without sock possible ?

N4.4 bind(int sockfd, const struct sockaddr *addr, socklen_t addrlen)

TCP bind is called by server, which provides services on a well known port, to which the client connects to. The client may also bind, but usually the client’s port is not of importance, so a bind() call is seldom made.

The usual flow, enter syscall, find the socket from fd, call the bind function from the ops, which is inet_bind(). Then, the get the sk from the sock, call sk->sk_prot->get_port(). In case of tcp it points to inet_csk_get_port(). The get_port() takes two arguments, sk and port num, and returns 0 if that port was available and was assigned to sk, and returns 1 if binding the port was not possible.

int __sys_bind(fd, sockaddr, int)

{

sock = sockfd_lookup_light(fd, &err, &fput_needed);

sock->ops->bind(sock, sockaddr, addrlen); // inet_bind()

{

struct sock sk = sock->sk

return __inet_bind(sk, uaddr, addr_len)

{

struct inet_sock *inet = inet_sk(sk);

lock_sock(sk); //lock sock

snum = ntohs(addr->sin_port);

if (sk->sk_prot->get_port(sk, snum))

// inet_csk_get_port()

{

err = -EADDRINUSE;

return err;

}

inet->inet_sport = htons(inet->inet_num);

// set src port

release_sock(sk); //unlock

}

}

}

The TCP subsystem maintains a hash table with a list of hashbuckets corresponding to each hash. Each bucket contains a list of sk which are bound to a port. First, it checks if a bucket exists for the port requested. If it does not, a new one is added and the sk is added ot the bucket. If the bucket exists, and if the certain conditions are satisfied (see the next paragraph), the sk is added to the bucket, and the bind is successful.

/* check tables if the port is free */

inet_csk_get_port(sk, port)

{

struct inet_hashinfo *hinfo = sk->sk_prot->h.hashinfo; // hash tables

struct inet_bind_hashbucket *head; // a bucket

struct inet_bind_bucket *tb = NULL;

int ret = 1;

if (!port) {

head = inet_csk_find_open_port(sk, &tb, &port);

// port is zero, assign a unused port

}

head = &hinfo->bhash[inet_bhashfn(net, port,

hinfo->bhash_size)];

//calc hash and find the right bucket head

spin_lock_bh(&head->lock);

inet_bind_bucket_for_each(tb, &head->chain) //search in the bucket

if (tb->port == port) //exact bucket found

goto tb_found;

// if nothing is found, this is the first sock to use the port

tb = inet_bind_bucket_create(hinfo->bind_bucket_cachep,

net, head, port);

tb_found:

if (!hlist_empty(&tb->owners)) { // bucket has sk, i.e. port is being used

if (sk->sk_reuse == SK_FORCE_REUSE)

goto success;

// see the explanation above inet_bind_bucket def by DaveM,

// which expains the below function's checks

if (inet_csk_bind_conflict(sk, tb, true, true))

goto fail_unlock;

}

success:

if (!inet_csk(sk)->icsk_bind_hash)

inet_bind_hash(sk, tb, port)

{

inet_sk(sk)->inet_num = snum;

sk_add_bind_node(sk, &tb->owners);

// add sk to bucket

inet_csk(sk)->icsk_bind_hash = tb; // update pointer

}

ret = 0;

fail_unlock;

spin_unlock_bh(&head->lock);

return ret;

}

Multiple sockets can be bound to a single port, both TCP and UDP use it to allow mutliple processes to share a port. All applications that want to reuse the port should us the reuseport socket option (SO_REUSEPORT) to allow sharing the port. See man page socket(7), about the use of SO_REUSEPORT, where possible use cases are also described. TCP (in Linux) has a unique way of allowing multiple sockets to share a port, this has been added as a comment in “include/net/inet_hashtables.h”, which have added below. This logic is implemented in inet_csk_bind_conflict().

/* There are a few simple rules, which allow for local port reuse by

* an application. In essence:

*

* 1) Sockets bound to different interfaces may share a local port.

* Failing that, goto test 2.

* 2) If all sockets have sk->sk_reuse set, and none of them are in

* TCP_LISTEN state, the port may be shared.

* Failing that, goto test 3.

* 3) If all sockets are bound to a specific inet_sk(sk)->rcv_saddr local

* address, and none of them are the same, the port may be

* shared.

* Failing this, the port cannot be shared.

*

* The interesting point, is test #2. This is what an FTP server does

* all day. To optimize this case we use a specific flag bit defined

* below. As we add sockets to a bind bucket list, we perform a

* check of: (newsk->sk_reuse && (newsk->sk_state != TCP_LISTEN))

* As long as all sockets added to a bind bucket pass this test,

* the flag bit will be set.

* The resulting situation is that tcp_v[46]_verify_bind() can just check

* for this flag bit, if it is set and the socket trying to bind has

* sk->sk_reuse set, we don't even have to walk the owners list at all,

* we return that it is ok to bind this socket to the requested local port.

*

* Sounds like a lot of work, but it is worth it. In a more naive

* implementation (ie. current FreeBSD etc.) the entire list of ports

* must be walked for each data port opened by an ftp server. Needless

* to say, this does not scale at all. With a couple thousand FTP

* users logged onto your box, isn't it nice to know that new data

* ports are created in O(1) time? I thought so. ;-) -DaveM

*/

N4.5 [Server] listen(int sockfd, int backlog)

After opening a socket and binding to a port, the server calls the listen() call. It is signal to the kernel that the application is now ready to accept connections. The kernel initializes the necessary data structures, so SYN packets can be received. This will be explained in the accept call. The code flow is the standard one, finally calling sk->sk_prot->listen(), the registered function is inet_listen().